
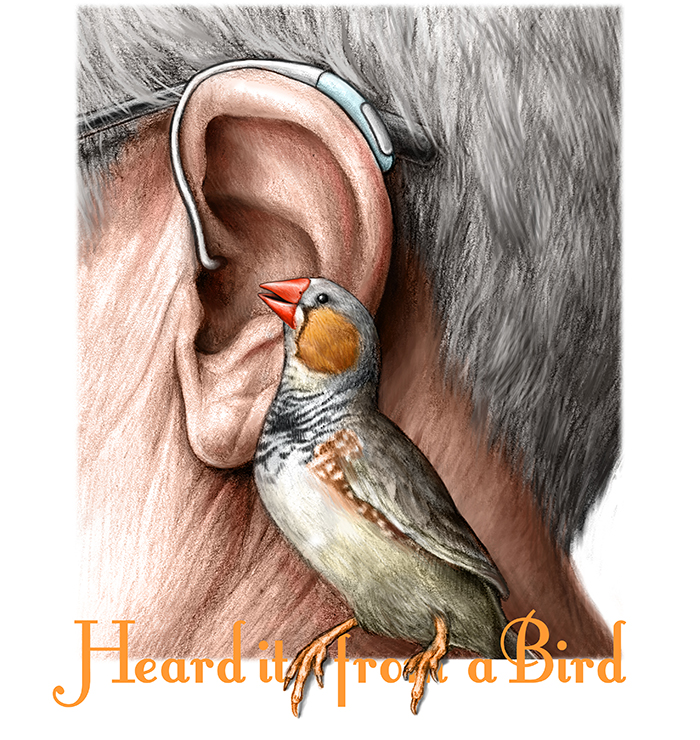

Illustration: Melissa Logies

Zebra finches tune into their mates’ songs even when it’s noisy. Chris Cesare examines how this skill might lead to better hearing aids. Illustrated by Melissa Logies.

Zebra finches mate for life. They also rarely shut up. “That combination is sweet in terms of studying communication,” says Frederic Theunissen, a neuroscientist at the University of California, Berkeley. “It's just like us: We're social and we're vocal.”

Most of the songbirds’ sounds are simple squeaks. Mates stay in touch, newborns ask for food, and the whole colony raises the alarm when danger is near. But researchers have long been more interested in the birds’ songs—complex vocalizations that males learn at a young age and use during courtship. Theunissen studies how finch brains recognize those songs amidst the clamor of other sounds.

Theunissen’s team is unraveling the complex path a sound takes from utterance to understanding. Along the way, the scientists have discovered zebra finch neurons with an intriguing ability: They respond to finch songs and ignore everything else. It’s probably what our brains do when we pluck speech out of a noisy background.

Although his lab focuses on basic science, Theunissen’s findings have led to a new computer algorithm to reduce noise. This advance could help audiologists fine-tune hearing aids for people. “We looked around to see what other algorithms existed,” Theunissen says. “But nothing had quite the flavor of ours, which is biologically inspired.”

From pips to chirps

What we experience as sound arises from tiny wobbles in air pressure that travel like waves along an invisible Slinky. We create these fluctuations when our vocal cords vibrate, knocking air molecules around. The faster the air shakes, the higher the frequency, or pitch, of the sound. Similar mechanics help us hear: Air beats on our eardrums, and small bones transfer the vibrations to our inner ears. There, the vibrations set tiny hairs swaying, sparking electrical signals that pulse into the brain.
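To make the link between shaking and pitch concrete, here is a minimal Python sketch. It assumes sound has already been digitized as a list of air-pressure samples (8,000 per second here, an arbitrary choice). It builds a pure tone, then estimates the tone's frequency by counting how often per second the pressure swings upward through zero. The function names are illustrative, not from any particular library.

```python
import math

def tone(freq_hz, duration_s=1.0, sample_rate=8000):
    """Air-pressure samples of a pure tone that swings freq_hz times per second."""
    n = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def estimate_frequency(samples, sample_rate=8000):
    """Count upward zero crossings (about one per cycle) and divide by the duration."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    return crossings * sample_rate / len(samples)

print(estimate_frequency(tone(440)))  # close to 440
print(estimate_frequency(tone(880)))  # close to 880: faster shaking, higher pitch
```

Real voices mix many frequencies at once, so this counting trick only works cleanly for a pure tone.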

Birds use the same basic mechanism, but early research on the acoustic abilities of bird brains was rudimentary. “All of the work was done with tone peeps—beep! boop!—very short tones at one frequency,” Theunissen says. “Or with synthetic sounds that were white noise—chshhhhhh.”

Like the birds he studies, Theunissen is energetic. He fidgets with a coffee cup while seated and occasionally emits a nervous laugh when he describes his work. He spends more time in the office that houses his postdoctoral researchers and graduate students than in his own. He even keeps a desk there, adorned with greeting cards featuring birds.

Photo: Chris Cesare

UC Berkeley neuroscientist Frederic Theunissen with one of his team's zebra finches.

By playing tones for birds and recording their brains' responses, researchers determined which neurons were sensitive to different frequencies. They could also predict the reaction to multiple tones by adding the individual responses. But that didn’t capture the whole picture. “It's a very good approach to figure out the components of the auditory system,” Theunissen says. “But you have to ask if it's relevant. What happens if you play a natural sound?”
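The adding-up approach is easy to state in code. This sketch assumes a hypothetical neuron whose response to a single tone follows a bell-shaped tuning curve around a best frequency of 2,000 hertz; the curve's shape and all of its numbers are invented for illustration. The linear prediction for several tones played together is simply the sum of the single-tone responses.

```python
import math

def tone_response(freq_hz, best_freq_hz=2000.0, bandwidth_hz=500.0, peak_rate=50.0):
    """Hypothetical tuning curve: firing rate (spikes per second) to one tone,
    highest at the neuron's best frequency and falling off in a bell shape."""
    return peak_rate * math.exp(-0.5 * ((freq_hz - best_freq_hz) / bandwidth_hz) ** 2)

def predict_linear(freqs_hz, **tuning):
    """Linear prediction: the response to several tones played together is
    assumed to be the sum of the responses to each tone played alone."""
    return sum(tone_response(f, **tuning) for f in freqs_hz)

print(tone_response(2000))                 # 50.0 at the best frequency
print(predict_linear([1500, 2000, 2500]))  # sum of three single-tone responses
```

However the tuning curve is drawn, a prediction like this can only ever be a sum. Any way a neuron responds to a natural sound that the sum misses is the gap Theunissen's question points to.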

A picture of a sound-processing hierarchy emerged in the 1980s when researchers discovered neurons in birds tuned to birdsong. The low-lying parts of the hierarchy recognize simple features like frequency, while the higher-ups respond to more complex structures like rhythm.

It wasn’t surprising to find neurons tuned to birdsong. More than a decade earlier, researchers working with bats had discovered neurons critical to echolocation. Parts of a bat’s brain responded only to a sound played twice in succession, and different regions looked for varying delays between the sounds. This architecture reflected the bats' reliance on tracking sound echoes to get around.

Theunissen pioneered efforts to study how bird brains respond to birdsong. A paper he published in 2000 was among the first to report on experiments probing zebra finch neurons using the finches’ rhythmic chirps. Stimulating the birds with natural sounds led to better predictions of brain activity than those derived from tonal pips and peeps.

Songbirds are especially well-suited for studying this acoustic processing, says neuroscientist Tim Gentner of UC San Diego. “There’s really no other [animal] that combines behavioral and acoustic complexity with the ability to do invasive experiments,” Gentner says. Almost all songbird research was about song production until Theunissen developed rigorous ways to use natural sounds, he notes.

Podcast produced by Chris Cesare. Zebra finch sounds provided courtesy of Julie Elie.

To be successful communicators, birds, bats and humans must recognize the important features of the sounds they care about. Those distinguishing characteristics come down to a sound’s timbre: the qualities it has apart from pitch, loudness and duration. “If I go ‘ahhh’ and ‘ehhh,’ the pitch is the same,” Theunissen says. “I'm using the same note. The intensity is the same, and the length is the same. It sounds different to you because of the timbre of the sounds.”

But for Theunissen, even the work with natural sounds was too artificial. As the air conditioning unit spools up overhead in a conference room next to his office, he says, “It's as if I were studying speech perception and you were always in a sound booth. There's no fan, there's no echoes, and there's only one speaker who speaks very clearly and slowly.” That doesn’t happen outside of the lab. Since birds must pick out songs or calls from among many other noises, Theunissen went searching for the neurons that made it possible.

Turn down that noise

About 50 zebra finches, none much bigger than a shot glass, dart to and fro in Theunissen’s basement aviary at UC Berkeley. They alight on small beams before fluttering away to the other side of the hutch. All the while they emit tiny twitters that sound like squeaky toys. Each bird has a red-orange beak, but only the males have bright cheek feathers to match and red sides speckled with white. Every so often a bird perches, raises its beak and breaks into song. “Their beak movements are really, really subtle,” says Tyler Lee, a neuroscience Ph.D. student. “I have a hell of a time trying to find out which one is singing.”

Photo: Chris Cesare

Dozens of zebra finches live in a research aviary at UC Berkeley.

Males learn their tune from their father and brothers, and after about three months it’s locked in for life. Most songs last less than three seconds. “They have these introductory notes of variable length, but all very fast,” Lee says. “Then they'll have more pitchy notes after that.” To the untrained human ear, the individual songs sound the same, but to zebra finches they sound as different as Taylor Swift and Cher.

To search for neurons tuned to birdsong, Theunissen, Lee and graduate student Channing Moore implanted thin wires into the brains of four zebra finches. They recorded electrical signals while playing the songs of 40 unfamiliar finches with and without noise. They also collected data for half a second before and after the songs to gauge the neurons’ normal activity. A miniature motor moved the probes in micron-sized steps, letting the scientists scan for responses to sounds. The grueling work required 12-hour recording sessions and created gigabytes of data. They crunched some of it in front of ViewSonic computer monitors, the brand with songbirds on the bevel.

In a 2013 paper, the trio described the results. Neurons high up in the chain of the birds’ auditory system fired in the same way, regardless of noise. They acted like filters, responding only to sounds with the same characteristics as zebra finch songs. Together, the features that trigger a neuron make up its receptive field. “It's what we think of as the neuron's view on the sound,” Lee says. “So it's looking for this particular feature in the sound, and when it sees it, it increases its response.”
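One common way neuroscientists write down a receptive field is as a grid of weights over frequency and recent time, often called a spectro-temporal receptive field. The sketch below assumes that simple linear-plus-threshold model; it is a textbook simplification, not the team's fitted model, and the tiny two-band example is made up. At each moment, the model neuron multiplies the recent slice of the spectrogram by its weights, adds everything up, and fires only if the total is positive.

```python
def neuron_response(spectrogram, receptive_field):
    """spectrogram: list of time frames, each a list of per-frequency energies.
    receptive_field: list of weight frames; receptive_field[0] weights the oldest
    frame in the window and receptive_field[-1] weights the current frame.
    Returns one firing-rate-like value per time step (negative drive clipped to 0)."""
    lags = len(receptive_field)
    rates = []
    for t in range(len(spectrogram)):
        drive = 0.0
        for k in range(lags):
            frame_index = t - (lags - 1) + k
            if frame_index < 0:
                continue  # window hangs off the start of the recording
            drive += sum(w * e for w, e in zip(receptive_field[k], spectrogram[frame_index]))
        rates.append(max(0.0, drive))
    return rates

# Hypothetical neuron that "likes" energy moving from the low band to the high band.
rf = [[1.0, -1.0],   # one frame ago: excited by low band, suppressed by high band
      [-1.0, 1.0]]   # now: excited by high band, suppressed by low band
upward_sweep = [[1.0, 0.0], [0.0, 1.0]]
downward_sweep = [[0.0, 1.0], [1.0, 0.0]]
print(neuron_response(upward_sweep, rf))    # [0.0, 2.0]
print(neuron_response(downward_sweep, rf))  # [1.0, 0.0]
```

The model neuron responds most strongly to the upward sweep that matches its weights; the downward sweep drives it only while the window is still partly empty.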

Reconstructing these receptive fields gave the team a model of how the neurons perceive the external world. But Theunissen and Lee saw a further opportunity. If the neurons were just looking for certain features in the sound, couldn’t they program a computer to do the same thing? And why stop at birdsong?

Silicon neurons

It’s easy to filter sound by breaking it up into its frequencies and allowing only certain frequencies through. The mechanical pieces in our ears do this, to some extent; it’s why we can’t hear frequencies that are too high or too low.
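Filtering by frequency, the easy case, fits in a few lines: convert a stretch of sound into its frequency components with a discrete Fourier transform, zero out the components outside the band you want, and convert back. This plain-Python version is deliberately naive and slow (practical systems use a fast Fourier transform), and the function names and example signal are our own.

```python
import cmath
import math

def dft(x):
    """Naive discrete Fourier transform: complex strength of each frequency bin."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]

def idft(spectrum):
    """Inverse transform back to (real-valued) samples."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]

def band_pass(samples, sample_rate, low_hz, high_hz):
    """Keep only frequency components between low_hz and high_hz."""
    n = len(samples)
    spectrum = dft(samples)
    for k in range(n):
        freq = min(k, n - k) * sample_rate / n  # bins above n/2 mirror the lower ones
        if not (low_hz <= freq <= high_hz):
            spectrum[k] = 0
    return idft(spectrum)

# Example: a 50 Hz hum mixed with a 300 Hz whine, 80 samples at 800 samples per second.
rate = 800
hum = [math.sin(2 * math.pi * 50 * t / rate) for t in range(80)]
whine = [0.5 * math.sin(2 * math.pi * 300 * t / rate) for t in range(80)]
mixed = [h + w for h, w in zip(hum, whine)]
kept = band_pass(mixed, rate, 0, 100)  # approximately equal to hum
```

This works here only because hum and whine occupy separate frequencies. When noise shares frequencies with the sound of interest, zeroing bins removes both.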

But that simple filter isn't good enough to pick out birdsong or human voices. Often, noise washes out the sounds of interest. The key to programming a computer to behave like the neurons was to treat sound not simply as a collection of frequencies, but as a richer soundscape that tracks how those frequencies change from moment to moment, capturing rhythm and other features. It was like making the jump from a normal map to a topographic map that also shows elevation. Neuroscientists believe that this representation, called a spectrogram, is how the brain sees sound.
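A spectrogram is usually computed with a short-time Fourier transform: slice the sound into short overlapping frames, taper each frame, and measure how strong each frequency is within it. The sketch below assumes 64-sample frames that start every 32 samples and a Hann taper; these settings are illustrative choices, not the ones Theunissen's lab uses. Each row of the result is a moment in time and each column a frequency, so a rising melody shows up as energy moving to higher columns in later rows.

```python
import cmath
import math

def spectrogram(samples, frame_size=64, hop=32):
    """Short-time Fourier transform magnitudes: one row per time frame,
    one column per frequency bin from 0 up to half the sample rate."""
    # Hann taper softens the frame edges so each frame's spectrum stays clean.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame_size) for i in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        chunk = [s * w for s, w in zip(samples[start:start + frame_size], window)]
        row = []
        for k in range(frame_size // 2 + 1):
            coeff = sum(chunk[t] * cmath.exp(-2j * math.pi * k * t / frame_size)
                        for t in range(frame_size))
            row.append(abs(coeff))
        frames.append(row)
    return frames
```

For sound sampled 8,000 times per second, each column spans 125 hertz, so a 1,000-hertz tone followed by a 2,000-hertz tone peaks in column 8 of the early rows and column 16 of the later ones.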

To start, Theunissen and Lee programmed a computer to look for the same acoustic features that the finch neurons recognize. Then, they presented a spectrogram of noisy birdsong to these digital representations of neurons. Imbued with their own receptive fields, they scanned the signal and picked out only the features they liked. Out popped a match to the clean song.

But they didn’t stop there. If one collection of receptive fields picked out birdsong, maybe a different set could strip the noise away from human speech. After all, the team could represent both speech and song by spectrograms. “So we designed an algorithm that learns whatever features work best for extracting speech from noise,” Lee says.

Photo: Chris Cesare

Neuroscientist Frederic Theunissen (left) and his graduate student, Tyler Lee.

Noise-canceling headphones work in a similar way but with one big difference: the noise is known. The headphones listen for external sounds with a microphone and pipe in a mirror-image signal that wipes away the noise. In contrast, Theunissen and Lee don’t know the noise ahead of time. They only know that different noises have different features, like background babble at a conference or the din of traffic on the street.
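The headphone trick works because sound waves add: a copy of the noise with every push turned into a pull cancels the original. This idealized sketch assumes the headphones measure the noise perfectly and respond with no delay, which real hardware can only approximate; the function names are hypothetical.

```python
def anti_noise(measured_noise):
    """Phase-inverted copy of the noise: every push becomes an equal pull."""
    return [-n for n in measured_noise]

def what_the_ear_hears(speech, noise, speaker_output):
    """Pressure waves at the eardrum simply add together."""
    return [s + n + o for s, n, o in zip(speech, noise, speaker_output)]

speech = [0.1, 0.2, -0.1]
noise = [0.5, -0.3, 0.2]
heard = what_the_ear_hears(speech, noise, anti_noise(noise))  # approximately equal to speech
```

The catch is the `noise` argument: cancellation needs a measurement of the noise by itself. When speech and noise arrive already mixed, as in Theunissen and Lee's problem, there is no separate noise signal to flip.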

They started with a set of receptive fields representing artificial neurons. At first, these had random preferences for sound, unrelated to the regular patterns associated with speech. The computer analyzed a set of noisy voices, initially picking out random pieces of the spectrogram. The output sounded nothing like the pure speech. But since Theunissen and Lee knew what the clean signal should be, they could track which artificial neurons were doing better and which were doing worse. They could then nudge the artificial neurons, bit by bit, toward liking the right features. After repeating this process dozens of times, they were left with a collection of trained, sharpened neurons that could tune out the noise.
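The nudging process resembles gradient descent, a standard machine-learning technique; the article doesn't spell out the team's exact training rule, so treat this as an assumption. In the sketch, each frequency bin of a cleaned-up spectrogram frame is produced by one hypothetical "artificial neuron" with its own weights over the noisy frame. The weights start out random, and after every training example each weight is nudged slightly in the direction that shrinks the gap between the neuron's output and the known clean signal.

```python
import random

def train_filters(noisy_frames, clean_frames, epochs=50, learning_rate=0.05, seed=0):
    """Learn weights so that each neuron's weighted sum of a noisy frame
    approximates the matching bin of the clean frame."""
    rng = random.Random(seed)
    n_bins = len(noisy_frames[0])
    # Start with random preferences, like the untrained artificial neurons.
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_bins)] for _ in range(n_bins)]
    for _ in range(epochs):
        for noisy, clean in zip(noisy_frames, clean_frames):
            for out_bin in range(n_bins):
                guess = sum(w * x for w, x in zip(weights[out_bin], noisy))
                error = guess - clean[out_bin]
                # Nudge each weight a little in the direction that shrinks the error.
                for in_bin in range(n_bins):
                    weights[out_bin][in_bin] -= learning_rate * error * noisy[in_bin]
    return weights

def apply_filters(weights, frame):
    """Run a (possibly unseen) noisy frame through the trained neurons."""
    return [sum(w * x for w, x in zip(row, frame)) for row in weights]
```

With toy frames in which "speech" lives in two frequency bins and "noise" in a third, a few dozen passes are enough for the learned weights to pass the speech bins through and suppress the noise bin on a frame they never saw. Real speech and noise overlap in both frequency and time, which is why the actual problem calls for richer features than this toy.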

Having trained the neurons on example recordings, Theunissen and Lee tested the software on unfamiliar speech embedded in noise. They found it could pick out voices it hadn’t been trained on.

When they looked for other noise-reduction algorithms, they found many that performed well—but none had the same biological bent. They contacted a colleague interested in noise reduction. “We said, ‘Hey, we have this cool algorithm,’” Theunissen recalls. “‘Maybe this is something that could be implemented in a hearing aid.’”

Borrowing again from biology, Lee added prediction to the algorithm. Since speech is rich with repeating structures, the brain doesn’t need to listen for the full sound of a vowel or consonant. A few milliseconds out of a hundred or so are enough to predict what the rest of the sound will be. “The brain has to function in real time,” Lee says. “It can't buffer several seconds and then go back and figure things out.”

Neither, it turns out, can a hearing aid.

Testing it out

About 1,500 feet from the Berkeley aviary, as the crow flies, Sridhar Kalluri sits in his office at the Starkey Hearing Research Center. Starkey, the country’s largest hearing aid manufacturer, set up this lab to assess hardware and software for the devices. “Typically, for hearing aid algorithms you ask ‘Did it improve your understanding of speech?’” Kalluri says. “That's the biggest thing for people.”

But it’s not the only thing. Imagine reading while listening to a baseball game. If you’re cozying up with a pop-culture magazine, you’ll remember more about the game than if you’re scrutinizing a scientific paper. The latter requires more concentration. Scientists believe our brains have only a limited amount of attention to give.

In 2009, Starkey tested the effects of noise reduction together with a different group of researchers from UC Berkeley. They reported an unexpected benefit from an algorithm similar to Theunissen and Lee’s. It didn’t make speech more intelligible, but it did reduce the brainpower required to understand it. In essence, noise reduction was the equivalent of converting a scientific paper into a magazine.

Extracting Speech from Noise

A spectrogram is a picture of sound that provides a bird's-eye view of rhythm and pitch. The graphic illustrates how a novel computer algorithm developed at UC Berkeley changes the spectrogram of a noisy spoken sentence.

Graphic: Chris Cesare

Such reductions in effort could make all the difference for the hearing impaired, who struggle in noisy rooms even with a hearing aid. “I live in a condo building where we have a lounge, and we serve dinner a couple times a week,” says Yorkman Lowe, 64, who wears his hearing aids full-time and participates in tests at Starkey Research. “I really have trouble hearing people next to me, and yet I hear people three or four seats down. At these group dinners I've realized I'm not going to have a conversation.”

Kalluri is working with Theunissen and Lee to tweak their software. But bringing the new algorithm to consumer devices will require an engineering balancing act. Hearing aids are small, with tiny batteries to match. This constrains the size and power of the computer chips that run the algorithms. It’s not insurmountable, though. As Kalluri notes, “What was a supercomputer 50 years ago is in a hearing aid now.”

People with normal hearing began testing the algorithm at Starkey in spring 2015. If the results are promising, Theunissen, Lee and Kalluri will move on to tests with the hearing impaired. Eventually, Kalluri says, the algorithm must graduate beyond simple tests. To attract serious attention from a company, Theunissen and Lee will have to show that their algorithm can run in real time on a hearing aid.

Ultimately, Theunissen and Lee’s algorithm could find its way into commercial devices. That’s welcome news for Charles Gebhardt, 67, a semi-retired community college counselor who also participates in Starkey tests. “I have to sit down and be very discerning and thoughtful and focused on the people who come in to see me,” Gebhardt says. “Sometimes they are soft-spoken. Hearing aids help quite a bit.”

In addition to small computers and batteries, another constraint confronts noise-reduction algorithms like Theunissen and Lee’s. “They're always going to be somewhat limited because in real environments, the noise is speech,” says Todd Ricketts, who directs a hearing aid research lab at Vanderbilt University Medical Center. “But there’s certainly the possibility that we'll have algorithms that improve speech recognition in hearing-impaired patients.”

In fact, Lee is now working on training the algorithm to pick out one voice in the presence of another. This work could enhance the viability of the algorithm in settings dominated by the chatter of other humans. It might also shed light on the cocktail party effect, the ability we have to focus our attention on one speaker among many. Trained on the features of a particular voice, the algorithm could even be tuned to the lilt of a loved one.

Throughout all of these manipulations, maintaining the qualities of the original sound is a steep challenge. “One of the problems with doing noise reduction is that you're also going to distort the signal,” Theunissen says. “We're very picky. Even if we can understand them better, we don't want to listen to our mates sounding all weird.”

© 2015 Chris Cesare / UC Santa Cruz Science Communication Program


Biographies

Chris Cesare

B.S. (physics) University of California, Los Angeles

Ph.D. (physics) University of New Mexico

Internship: Nature

I made my dad teach me how to play a computer game when I was five. Eventually, the insides of that mysterious gray box grabbed my attention, and playing turned to tinkering. I had to know how it worked.

Physics taught me about basic digital building blocks and how thermodynamics demands that computers function as space heaters. When I came across quantum mechanics—the ironclad laws of the very small—I was smitten. In graduate school I studied how to harness these quantum rules to make new kinds of computers. But as my focus narrowed, my curiosity expanded, and my devotion to a scientific career became uncertain.

Now I will satisfy that curiosity by telling science stories. No longer focused solely on quantum physics, I will probe what we know and how we know it.


. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Melissa Logies

B.F.A. (biomedical art) Cleveland Institute of Art

Internships: California Academy of Sciences, Smithsonian Institution National Museum of Natural History

Melissa Logies is a scientific illustrator from Cleveland, Ohio. She spent her childhood filling sketchbooks with her observations of plants and animals, intrigued by the beauty and complexity of nature. In college, she discovered that art and biology could be combined into a career and majored in biomedical art, a program focusing on medical illustration. She is thrilled to complete her graduate education in natural science illustration at California State University, Monterey Bay (CSUMB) and plans to pursue a career in botanical and entomological illustration following an internship at the National Museum of Natural History.

