
Illustration: Natalia Wilkins

Could engineers who like to share take a slice out of the market for robot-assisted surgery? Sarah Jane Keller probes the issue. Illustrated by Natalia Wilkins.


There are more nerve endings in your fingertips than anywhere else in your body. Your hands and fingers have enough articulation to move in 30 different ways. For a long time, machines had nothing on our hands.

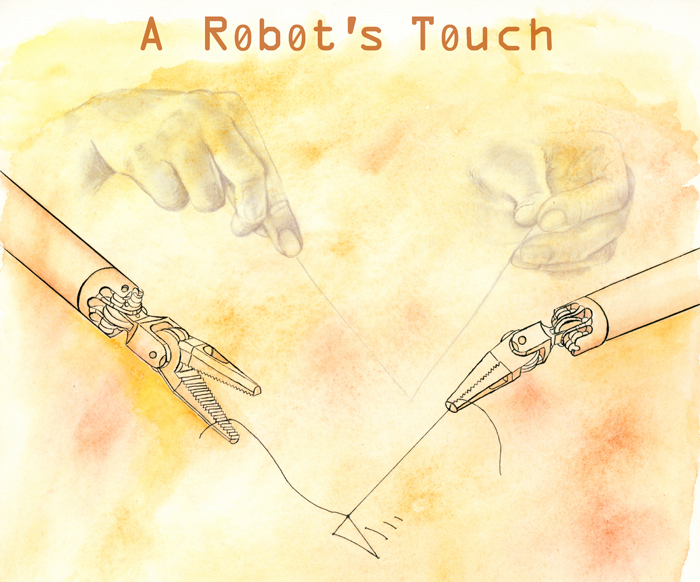

This was especially true in surgery, where skilled handiwork is still revered. But now, some surgeons sit behind the controls of a machine and operate without touching their patients. Tiny surgical instruments attached to a robot are more flexible than human hands and wrists, a camera magnifies organs, and computer algorithms remove the tremors that inevitably creep in when human tendons and muscles hold instruments for hours.

The robotic system used in many hospitals is another tool to extend the surgeon’s reach. It enhances some aspects of the surgeon’s hands, but it also denies them an exquisite sense of touch.

Many doctors and patients already swear by the robot for urological and gynecological surgeries, while others are waiting for the next generation of surgical tools. Jacob Rosen, director of the bionics lab at UC Santa Cruz, is working on a new robotic approach—one that could restore touch to the craft.

Rosen’s research system, called the Raven, is lighter, smaller, and less expensive than today’s surgical robots. It’s also the first to run on open-source software, a radical departure from the closely guarded technology now in hospitals. After 11 years of minimally invasive abdominal surgery with robots, only one company controls the hardware and software used in all U.S. robot-assisted surgeries.

By freely sharing progress with other research teams, Rosen and his collaborators at the University of Washington hope to bring an engineer’s touch to the operating room.

da Vinci’s first cut

Going under the knife today is a sharp contrast to the big incisions—called open surgeries—made for most of history. The scalpel loomed large in the minds of patients, because it’s what surgeons used to make long, slow-healing incisions. Imagining that gloved hand holding a blade next to your glossy organs and arteries makes you hope it comes with a steady touch.

By the late 1980s, surgeons could get under your skin, muscle, and fat without cutting you open or even touching your organs. They began running a camera, a light source, and tiny tools through small openings in the body—a method known as minimally invasive surgery. It’s called laparoscopy if it’s done in the pelvis or abdomen.

“A long time ago, it was the larger the incision, the greater the surgeon,” Rosen says. “Now it’s the smaller the incision, the greater the surgeon.” Tunneling tools through a port plugged into a keyhole-sized incision can cut down patient recovery time, bleeding, infections, and pain.

Surgeons embraced laparoscopy because it can reduce trauma, but they sacrificed dexterity. A traditional laparoscopic tool is about the size of a chopstick, with the same flexibility. The human wrist, on the other hand, can move in three different ways.

Instruments at the end of robotic arms gave surgeons seven ways of moving that are similar to the human arm and hand. The systems—which surgeons fully control from a console—were a natural extension of laparoscopy, where a camera inside the patient replaces the surgeon’s eyes.

The da Vinci robot, owned by Intuitive Surgical, Inc. of Sunnyvale, California, is the only robotic system used for laparoscopic surgery; about 1,500 hospitals use it today. It began 20 years ago when Richard Satava, now a professor of surgery at the University of Washington, traveled from his post at the former Fort Ord Army base near Monterey to work with engineers in Silicon Valley. Their goal was a robotic system to sew together pencil-sized nerves.

Satava once dreamed of performing surgery in space. After leaving Fort Ord in 1992, he managed the advanced biomedical technology program at the Defense Advanced Research Projects Agency (DARPA) and funded the remote surgery project that led to da Vinci. DARPA, charged with “creating and preventing strategic surprise,” has had a hand in many university or industrial research programs with potential military applications. The U.S. Food and Drug Administration cleared da Vinci for commercial use in 2000.

Surgeons use the nearly half-ton robot and its four towering arms in cardiac, chest, urologic, gynecologic, pediatric, general, and certain kinds of facial and neck surgeries. Eighty percent of prostatectomies—prostate removals—are now done with da Vinci. Overall, its use has quadrupled in the last four years.

Satava, who has no financial ties to Intuitive Surgical, explains his view of the system’s popularity: “The da Vinci robot can filter out all [hand] tremor,” he says. “There is no human on this planet—not a single human—that is able to have an accuracy of greater than 100 microns. Now, any surgeon can sit down and do 10-micron or 20-micron accuracy with no tremor, no shaking. You magnify your vision so you can see five or ten times closer and more accurately than you can with your own naked eyes.

“What we have now is the ability to extend ourselves far beyond what a human was ever designed for or evolved into being.”
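For readers curious how software can "filter out" tremor, here is a minimal Python sketch. It assumes a common textbook approach, not Intuitive Surgical's undisclosed method: a first-order low-pass filter that passes slow, deliberate hand motion while damping physiological tremor (roughly 8 to 12 Hz), followed by motion scaling that shrinks each hand movement into a smaller instrument movement. The function names, the 2 Hz cutoff, the 1 kHz sampling rate, and the 5:1 scale factor are all illustrative assumptions.

```python
import math


def low_pass(samples, dt, cutoff_hz):
    """First-order IIR low-pass filter (exponential smoothing).

    Motion well below cutoff_hz passes nearly unchanged; faster motion,
    such as tremor, is attenuated.
    """
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # filter time constant (s)
    alpha = dt / (rc + dt)                   # smoothing factor between 0 and 1
    smoothed = []
    y = samples[0]                           # start at the first reading to avoid a jump
    for x in samples:
        y += alpha * (x - y)
        smoothed.append(y)
    return smoothed


def scale_motion(samples, factor):
    """Motion scaling: a factor of 0.2 maps 5 mm of hand travel to 1 mm of tool travel."""
    return [factor * s for s in samples]


if __name__ == "__main__":
    dt = 0.001  # hypothetical 1 kHz sampling of the surgeon's hand position
    times = [i * dt for i in range(1000)]
    # Hypothetical hand trace (mm): slow 0.5 Hz motion plus a 0.1 mm, 10 Hz tremor
    hand = [
        5.0 * math.sin(2 * math.pi * 0.5 * t) + 0.1 * math.sin(2 * math.pi * 10 * t)
        for t in times
    ]
    tool = scale_motion(low_pass(hand, dt, cutoff_hz=2.0), factor=0.2)
    print(f"final tool position: {tool[-1]:.3f} mm")
```

With these settings the filter passes the slow motion almost intact but cuts a 10 Hz tremor to about a fifth of its size; real systems tune the cutoff so deliberate motion isn't noticeably delayed.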

Judging the surgeon’s art

Ten years ago, Jacob Rosen tried to extend his biomedical research by working with da Vinci’s manufacturer to collect data, but the company refused his request.

Rosen doesn’t blame Intuitive Surgical. They were protecting their property, he says, and as a private company they don’t owe anything to the research community. “I don’t think they will regret it, because they are a multi-billion dollar company right now and we are not even challenging their market,” he says. “But I think we are challenging them intellectually.”

Photo: Sarah Jane Keller

Engineer Jacob Rosen in the Bionics Lab at UC Santa Cruz.


Rosen, who once served as an officer studying human-machine interfaces in the Israel Defense Forces, says he learned early on that “engineering is all about positively affecting the human race.”

Mika Sinanan, a University of Washington professor of surgery, calls Rosen a great collaborator. In 1997, Sinanan and Rosen began working with Blake Hannaford, director of the University of Washington BioRobotics Laboratory, to find better ways to train laparoscopic surgeons. The group zeroed in on objective measures of surgical skill.

Rosen designed an arm-like sensor system, called Blue Dragon and later Red Dragon, to grasp surgical tools and record the motions surgeons make during operations. In one study, Blue Dragon recorded the movements and forces surgeons applied while suturing pig cadavers.

The suture—threading a curved needle through a port into the patient’s abdomen, rejoining sliced tissue, and deftly tying a knot—is considered a benchmark of surgical handiwork. It's one of the most difficult laparoscopic techniques. Less-than-masterful skill could mean puncturing organs with unsteady movements.

Rosen analyzed the surgical kinematics and dynamics collected by Blue Dragon with a mathematical model normally used in speech recognition to find hidden patterns in human language. In the operating room, the model uncovered the language of surgical motion at different levels of experience. “What evolved out of the research is the idea that surgery is like a human spoken language, with a specific number of words with different pronunciations,” Rosen says. “Each word is a different combination of interactions with the tool, and the tools, velocity, and forces are the different pronunciations.”

The actions surgeons made while operating on their porcine patients translated into 16 different maneuvers, such as “grasping” tissue, “pushing” tools downward, and “sweeping” tissue in a circular motion. Less-experienced surgeons hesitated to damage delicate tissues by pressing too hard, so they were less consistent in their movements. Experienced surgeons made tight maneuvers and consistently applied a lot of force with some motions and less during others. Sinanan and Rosen compared the patterns of each surgeon to those of his or her peers, revealing whether the surgeon was performing at the expected level of “linguistic sophistication,” or surgical proficiency.
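The article doesn't name Rosen's model, but speech recognizers have long been built on hidden Markov models, which infer a sequence of unseen states (the "words") from noisy measurements (the "pronunciations"). Assuming that kind of model, the Python sketch below uses the Viterbi algorithm to decode the most likely sequence of maneuvers from quantized tool signals. The three maneuver names come from the study described above; the observation symbols ("grip", "axial", "rotation") and every probability are hypothetical placeholders, not values from Rosen's data.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state sequence for `observations`.

    Works in log space so long recordings don't underflow. All
    probabilities must be nonzero (math.log(0) raises ValueError).
    """
    # best[t][s]: log-probability of the best path that ends in state s at step t
    best = [{s: math.log(start_p[s]) + math.log(emit_p[s][observations[0]]) for s in states}]
    back = [{}]  # back[t][s]: the state before s on that best path
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((p, best[t - 1][p] + math.log(trans_p[p][s])) for p in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + math.log(emit_p[s][observations[t]])
            back[t][s] = prev
    # Trace back from the most likely final state
    state = max(best[-1], key=best[-1].get)
    path = [state]
    for t in range(len(observations) - 1, 0, -1):
        state = back[t][state]
        path.append(state)
    return path[::-1]


# Hypothetical model: maneuver names from the study, numbers invented for illustration
STATES = ("grasping", "pushing", "sweeping")
START_P = {"grasping": 0.6, "pushing": 0.2, "sweeping": 0.2}
# Assume a surgeon tends to continue the current maneuver, so self-transitions dominate
TRANS_P = {s: {s2: (0.7 if s2 == s else 0.15) for s2 in STATES} for s in STATES}
# Observation = which quantized tool signal dominates a short time window
EMIT_P = {
    "grasping": {"grip": 0.8, "axial": 0.1, "rotation": 0.1},
    "pushing": {"grip": 0.1, "axial": 0.8, "rotation": 0.1},
    "sweeping": {"grip": 0.1, "axial": 0.1, "rotation": 0.8},
}

if __name__ == "__main__":
    signals = ["grip", "grip", "axial", "axial", "rotation"]
    print(viterbi(signals, STATES, START_P, TRANS_P, EMIT_P))
    # -> ['grasping', 'grasping', 'pushing', 'pushing', 'sweeping']
```

In a skill study along these lines, one such model would be trained per experience level, and a new surgeon's recording scored against each to see which level it most resembles.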

Next, Rosen and his colleagues wanted to collect similar data from a surgical robot. A decade ago, the da Vinci system was just starting to appear in hospitals. Rosen thought they could use the robot’s data port to gather fine details on real surgical movements. That's when Intuitive Surgical rejected his request.

Rosen explains why they couldn’t move their robotics research forward without da Vinci’s real-world data: “You can develop beautiful algorithms, but they will always be left in this beautiful place of simulation,” he says. “Reality is harsh.” They needed a physical system.

That system became the Raven: an open platform where Rosen and his colleagues could test their ideas and access “every single element of the system.”

“I think we have it now, but it took us ten years to do it,” Rosen says.

Freeing the Ravens

After capturing motion from surgery on animals, Rosen had raw material for creating a surgical robot—a trove of data describing the precise angles, forces, and torques of the surgeon’s art. Working with Hannaford, Rosen generated 5,476 possible designs. They had to choose one that would yield the smallest robot while meeting all requirements of surgical motion. Some of those goals are contradictory, but Rosen calls engineering “the art of compromise.” Using their operating-room data, they applied a mathematical give-and-take, called optimization, to arrive at the most satisfying robot geometry.
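The selection step can be pictured as a constrained search: discard every candidate that fails a requirement, then keep the smallest survivor. The Python sketch below assumes a toy design space of two link angles, a made-up reach requirement, and a made-up size measure; none of these come from the Raven's actual specifications, and the real optimization balanced many more competing goals.

```python
from itertools import product


def best_design(candidates, size, meets_requirements):
    """Return the smallest candidate that satisfies every requirement, or None."""
    feasible = [c for c in candidates if meets_requirements(c)]
    if not feasible:
        return None
    return min(feasible, key=size)


# Hypothetical design space: pairs of link angles (degrees) on a 5-degree grid
CANDIDATES = list(product(range(30, 91, 5), repeat=2))


def reaches_workspace(design):
    # Made-up requirement: together the two links must sweep at least 110 degrees
    a1, a2 = design
    return a1 + a2 >= 110


def link_size(design):
    # Made-up size proxy: longer arcs mean bigger, heavier links
    a1, a2 = design
    return a1 ** 2 + a2 ** 2


if __name__ == "__main__":
    print(len(CANDIDATES), "candidates ->", best_design(CANDIDATES, link_size, reaches_workspace))
```

The two goals pull against each other, which is the "art of compromise" Rosen describes: a bigger reach demands bigger links, so the winner is the design that just meets the requirement.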

Photo: Sarah Jane Keller

Jacob Rosen and the Raven robotic surgical system at UC Santa Cruz.


The result became the Raven. With its two narrow, cabled arms hinging out and over either side of the operating table, it looks light enough to take flight. Fittingly, Rosen and Hannaford designed it to travel long distances and into harsh environments.

Like da Vinci, the Raven was hatched from military funding for surgery where a battlefield, an ocean, or thousands of miles separate the surgeon’s hand from the patient’s body. Rosen and Hannaford first used the Raven to show the possibility of operating in an underwater research center, using simulated human bodies. They also took it off the grid into the California desert, where an aerial drone provided wireless communication between the surgeon’s operating console and the robot.

A major challenge in operating on patients an ocean away is the time delay as data travels between the surgeon and the patient. Automation could overcome the lag, but the idea is a long way from surgical practice. Lack of standardized hardware, software, and communications has been a problem, Rosen says.

He hopes the Raven system will change that by giving many research teams one platform over which to share progress. Early in 2012, Rosen and Hannaford shipped copies of the newest Raven system to seven university teams, all working on medical robotics.

Restoring the surgeon’s touch

From Rosen’s perspective as an engineer, “once you introduce a surgical robot, you want to reproduce all the senses and capabilities as if you were touching the tissue with your fingers.”

Restoring touch, also called haptics, is a 30-year-old field. Now, electrical engineer Howard Chizeck at the University of Washington is taking a new approach. Rather than sensing forces directly from a surgeon’s tools or from resistance in motors, he and his students detect them with a camera whose pixels contain information about color and an object’s depth.

The trick is converting the visual and depth information from the camera into information about forces, then sending it to the user through a two-way joystick-like device. Chizeck and his student found a way to do that in late 2010 by using hacked data from Microsoft’s Kinect, the motion-sensing game controller for Xbox 360. Now, the BioRobotics Laboratory has an affordable and research-ready 3-D depth camera.

In one demonstration, Chizeck directs his controller to place a red dot on the image of a piece of paper that sits across the room. When a person on the other end lifts the paper, the red dot moves too. As if possessed, Chizeck’s joystick jumps, without anyone touching it. Chizeck says the red dot, called the haptic interaction point, is “a ghostly finger in the virtual world that’s touching the physical space.”

His group is now integrating the Kinect camera with the Raven system. The joystick moves the robot and lets the user “feel” objects. Based on images from the Kinect camera, the computer provides a virtual world where a surface, the robot, and the human hand can interact, even though they are physically separate. The forces sent through the controller to the user are based on algorithms written by Chizeck’s team to calculate how the Kinect image deforms in response to the robot.
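One common way to turn a depth image into a force is penalty-based haptic rendering: when the haptic interaction point slips "behind" the surface seen at its pixel, the controller pushes back in proportion to how far it has penetrated, like a stiff spring. The Python sketch below assumes that approach, a depth map stored as rows of distances in meters, a force directed straight back toward the camera, and a hypothetical stiffness. It illustrates the idea and is not Chizeck's team's algorithm, which the article doesn't detail.

```python
def contact_force(depth_map, hip, stiffness):
    """Penalty-based haptic force for a haptic interaction point (HIP).

    depth_map[row][col] is the camera-to-surface distance in meters at that pixel.
    hip is (row, col, depth): the pixel the virtual finger sits over and its
    distance from the camera. Returns the force in newtons pushing the HIP
    back toward the camera; zero while the HIP stays in front of the surface.
    """
    row, col, hip_depth = hip
    penetration = hip_depth - depth_map[row][col]  # positive means "inside" the surface
    if penetration <= 0.0:
        return 0.0
    return stiffness * penetration  # Hooke's law: F = k * x


if __name__ == "__main__":
    # Hypothetical 3x3 depth patch: a flat sheet of paper 1.0 m from the camera
    paper = [[1.0] * 3 for _ in range(3)]
    print(contact_force(paper, (1, 1, 0.98), stiffness=500.0))  # in front of the paper
    print(contact_force(paper, (1, 1, 1.02), stiffness=500.0))  # 2 cm "inside": about 10 N
```

Under this model, lifting the paper toward the camera shrinks the surface depth under a stationary interaction point, so penetration turns positive and the controller pushes back: one way to picture the joystick jumping on its own.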

In the future, the ghostly finger could encounter “virtual fixtures” that would help a surgeon feel a path for cutting, says Chizeck. If a surgeon veers off course, the fixture would steer him or her back with a push through the joystick.
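A guidance fixture of the kind Chizeck describes can be modeled as a virtual spring between the tool and the nearest point on the planned path. The Python sketch below assumes a straight cut in two dimensions, positions in millimeters, and a hypothetical stiffness; the returned force vector points back toward the line and grows with the deviation.

```python
def fixture_force(tool, start, end, stiffness):
    """Guidance virtual fixture: spring force pulling a 2-D tool position
    toward the nearest point on the planned cut from `start` to `end`."""
    sx, sy = start
    ex, ey = end
    px, py = tool
    dx, dy = ex - sx, ey - sy
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        cx, cy = sx, sy  # degenerate path: a single target point
    else:
        # Project the tool onto the line, then clamp to the segment's ends
        t = ((px - sx) * dx + (py - sy) * dy) / length_sq
        t = max(0.0, min(1.0, t))
        cx, cy = sx + t * dx, sy + t * dy
    # Force grows with deviation and points back toward the path
    return (stiffness * (cx - px), stiffness * (cy - py))


if __name__ == "__main__":
    # Hypothetical 10 mm cut along the x-axis; the tool has drifted 2 mm off course
    print(fixture_force((5, 2), (0, 0), (10, 0), stiffness=3.0))  # (0.0, -6.0)
```

On the path the force vanishes, so the fixture only makes itself felt when the surgeon veers off course.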

“I think it will help prevent mistakes, make surgery easier, and make good surgeons faster,” says Chizeck.

Touchy feelings about risk

Current da Vinci systems include basic haptics. But for Martin Makary, a laparoscopic surgeon from the Johns Hopkins University Medical Center and author of a book on healthcare transparency, the loss of feeling is palpable.

He calls restoring human touch in surgical robotics “one of the great Achilles' heels of robotic surgery.”

Makary cites several severe injuries during da Vinci surgery first reported by the Wall Street Journal in 2010, including two incidents of lacerated bladders at a New Hampshire hospital. The concern was that the small hospital lacked the resources to help surgeons master the new technique. After three days of training from Intuitive Surgical, each hospital is responsible for further training. Makary thinks haptic feedback might have prevented the injuries.

The Wall Street Journal report could not compare the injuries during da Vinci surgery to similar operations at other hospitals, stating that most hospitals don't disclose such data.

After a decade of da Vinci surgery and thousands of publications on it, there are no clinical trials and few population studies comparing complications from robot-assisted laparoscopic surgery, traditional laparoscopy, and open surgery.

Researchers at Harvard Medical School analyzed a large U.S. government database of medical billing records and found that for prostatectomy, the most common da Vinci procedure, both robot-assisted and traditional laparoscopic surgeries have fewer short-term complications than open surgery. However, the authors reported in the April 2012 Journal of Urology that using the robot for prostatectomy is more costly. They acknowledged that their study is limited by its lack of long-term data and called for more comparisons.

“The robot has made it easier to do minimally invasive surgery,” says Jim Hu, the study’s lead author, now a urologist at UCLA. “While the robot is a great piece of technology, it’s still the surgeon that drives a lot of these outcomes,” Hu points out. The study also found no deaths associated with robotic surgery, an outcome that surprised Hu.

Makary remains wary of the purported benefits and costs of robotic surgery compared to traditional minimally invasive surgery. In the November 2011 Journal for Healthcare Quality, he examined robotic surgery claims on hospital websites. He found the majority of sites use content provided by Intuitive Surgical, Inc. In some cases, he concluded, a hospital's public statements may overstate the benefits of the da Vinci system.

“There’s something that doesn’t seem right about a monopoly in a surgical device,” Makary says.

Chris Simmonds, senior director for marketing services at Intuitive Surgical, responded via email to questions about the studies and the incidents reported by the Wall Street Journal: “It is not our position to comment on individual papers or stories.” Intuitive Surgical believes the robotic system's growth is patient-driven and that da Vinci surgery has been extensively studied, Simmonds wrote.

Another handy tool

While the surgical community debates the value of robotic surgery, even Makary sees a future for it—especially if the systems are better than standard laparoscopy, more portable, more flexible to use, and less expensive.

According to Sinanan, the Raven is a good example of what is possible with the next generation of surgical robots. The robot could be wheeled in and out of a procedure—laparoscopic or open—to augment the surgeon’s hands during challenging tasks. Robots would be another tool, like a scalpel or scissors, he says.

Rosen doesn't plan to bring the Raven to the market. But his lab is designing a new surgeon’s console that would be compatible with many different kinds of surgical robots. A prototype of this “surgical cockpit” will be ready in 2013. Rosen envisions hospitals buying surgical robots for different procedures and using a single console to operate them.

Those robots could evolve from research using the Ravens that Rosen and Hannaford introduced throughout the U.S. They and their fellow engineers aren't the only ones grasping the future of haptics, automation, and remote surgery, says Rosen: “Surgeons are extremely practical people. If you put something useful in their hands, they will find ways to use it that no one could think of before.”

Many aspects of surgical robotics are still in the fledgling stages of research. But with the Ravens released, surgeons and patients may start to see more new tools waiting in the wings.

Story ©2012 by Sarah Jane Keller. For reproduction requests, contact the Science Communication Program office.


Biographies

Sarah Jane Keller

B.A. (biology, ecology concentration) University of Montana

M.S. (earth and planetary sciences) University of New Mexico

Internships: Los Alamos National Laboratory, Conservation magazine (Seattle)

Curious students in the dusty far reaches of New Mexico called me the "hawk lady." With a predatory bird on my arm, I described how food chains and watersheds work. In graduate school, a computer replaced my bird-nerd persona and my field biologist’s boots. My community, like those I had visited in Peru and Mexico, depended on mountain water sources. I was drawn to studying trends in the southwestern U.S. snowpack, a field rife with weighty implications—and controversies.

In time, I decided that showing raptors to kids, or revealing the difference between weather and climate to friends and family, had more personal and public impact than yet another paper in peer review. Instead of adding more results to the specialized literature, I will gladly spread science stories to the far-flung reaches of public discourse.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Natalia Wilkins

B.A. (biology and art) Lake Forest College

Internship: Natural History Museum of Utah

Having grown up traveling and seeing the splendors of the natural world, I quickly became enamored with biology. My artwork as a result drew inspiration from that beauty. I have always looked for ways to combine my two passions, biology and art; when I learned of science illustration, I knew I had found the perfect career. My hope is to work for museums and illustrate field guides. To see more of my art, please visit my website.
