Rediscovering the Fingerprint

Fingerprints form the backbone of forensic evidence, but how much weight can they bear? Tia Ghose examines the case. Illustrated by Cynthia Clark and Adriana Ocaña Lara.

Illustration: Cynthia Clark

Police officer Richard Gallagher noticed a man leering at Boston girls in 1997. Gallagher called out after the man, who ran. Gallagher chased him into a fenced-in yard, where the perp shot the cop twice. The culprit raced into a nearby house and held the owners at gunpoint. Then, he gulped down a glass of water and disappeared, leaving a single fingerprint behind.

Police matched that fingerprint to Stephan Cowans, a ne’er-do-well with a penchant for shoplifting but no violent history. The jury convicted Cowans of attempted murder and sentenced him to 35 to 40 years. But in 2004, a forensics lab lifted a small bit of DNA from the glass. The DNA didn’t match Cowans, and he was freed—after seven years in prison.

The Cowans case is just one of many tremors shaking the foundations of forensic science. For years, fingerprint examiners claimed no two fingerprints are alike and that a match proffers absolute certainty you’ve got the right crook. In the last several years, however, researchers have increasingly challenged that claim. A 2005 study in Science found 63% of all wrongful convictions were at least partly caused by bad forensic evidence. The issue gained momentum in February 2009, when a National Academy of Sciences (NAS) report found scientists had never tested many forensic techniques, including fingerprinting.

“With the exception of nuclear DNA analysis. . . no forensic method has been rigorously shown to have the capacity to consistently, and with a high degree of certainty, demonstrate a connection between evidence and a specific individual or source,” noted Harry T. Edwards, a U.S. Court of Appeals judge in Washington, D.C., and co-chair of the report, in a December 2009 presentation to the National Institute of Standards and Technology.

Several teams of researchers are trying to change that. Using a database of prints and a statistical model, one team found that some patterns are so common in the general population, or so smudged, that a foolproof match is unlikely. Other rare features make an ID ironclad.

Yet prosecutors have pushed back against efforts to quantify fingerprint error. They worry it will cripple their cases and make the rest of their forensic evidence seem shaky. Many judges, meanwhile, may not know the foundation for fingerprinting has been steadily eroding, and continue to allow fingerprint evidence in court.

Growing skepticism

Fingerprint identification got its start in British India in 1857. William Herschel, a bureaucrat overwhelmed by the day laborers who came to claim their day’s wages, suspected some people were cashing in twice or impersonating others. To keep fraud at bay, he took a handprint from every worker, and checked those prints when the laborer picked up payments.

Yet it wasn’t until 1910, when a print helped put Thomas Jennings away for murder in a Chicago trial, that fingerprinting gained acceptance in U.S. law. Several fingerprint experts took the stand and cited the 1892 book Finger Prints by Sir Francis Galton. Galton had studied print permanence and individuality, finding that prints are stable throughout life; even identical twins had slightly different patterns. The practice was considered reliable by the late 1920s.

Since then, experts have given a simple ‘yes’ or ‘no’ identification on a print, and IDs were almost never challenged. “Fifty years later, the argument was simply ‘This has been around for 50 years so there can’t be anything wrong,’” says criminologist Simon Cole of the University of California, Irvine, who criticizes fingerprinting’s lack of rigor.

For decades, the notion that every fingerprint was unique, and therefore incontrovertible proof of a positive ID, was taken for granted. But in a 1993 ruling, the Supreme Court clarified the rules for admitting evidence in federal courts, and many states followed suit. In Daubert v. Merrell Dow Pharmaceuticals, the justices rejected studies that linked a drug called Bendectin to birth defects, on the grounds that the study methods had never been established as reliable. “That invited defense attorneys to take a harder look at evidence that they’d basically been accepting for a long time,” though few took up the challenge, Cole says.

Then came the 2004 train bombings in Madrid, Spain. The police lifted a partial print from one of the duffel bags that held the bombs. Dozens of countries scanned their databases, seeking a match. The FBI’s Integrated Automated Fingerprint Identification System (IAFIS), which contains more than 3 billion prints, found a potential match to Oregon attorney Brandon Mayfield. The FBI sent the print to one of the most highly regarded fingerprint examiners, San Francisco-based forensic scientist Kenneth Moses.

Moses testified by phone that the Mayfield print positively matched the smudgy print on the duffel bag. A mere 20 minutes later, the Spanish police informed the FBI that they had found the real culprit: an Algerian man named Daoud Ouhnane, who had links to an Al-Qaeda-inspired terrorist group.

Moses believes the mistaken ID was just a fluke, that the prints were freakishly similar. “The FBI came up with a print and it came up with a match of fifteen minutiae. Fifteen points? Hell, that’s pretty convincing,” he says. He also grouses about the fact that the Spanish authorities had other prints on the bag that would have ruled Mayfield out, but didn’t send them to Moses.

Moses, a salty, genial man who spent 15 years heading the San Francisco Police Department’s CSI unit, was no rookie. He’d investigated 18,000 crime scenes and more than 600 homicides, built the country’s first computer fingerprint databases, and laid the foundation for IAFIS.

That smudgy print fueled a wholesale re-evaluation of forensic evidence; the NAS study would not have been done without it, Moses says. The public snafu highlighted the contrast between what fingerprint examiners were saying—that their method was absolutely accurate—and the reality that a skilled practitioner had messed up.

The 2009 NAS report was scathing in its critique of the forensic sciences. For fingerprints, the report found no scientific studies backed the fingerprinting community’s assertion that a match can be made with absolute certainty. Many examiners were trained improperly or were simply incompetent. In some cases, labs required that applicants have just a “high school degree, and a pulse,” says Santa Cruz County fingerprint examiner Lauren Zephro.

Photo: Tia Ghose

Santa Cruz County fingerprint examiner Lauren Zephro with a painting—made with the artist's fingerprints—of Sir Galton, the father of modern fingerprinting.

Labs were strapped for cash and scraped by on “whatever the police department was willing to throw to them,” says co-chair of the committee and Brown University biostatistician Constantine Gatsonis. And matches were plagued by bias: one study showed examiners who were told the prints came from someone in custody were likelier to assign a positive match.

“Fingerprints do provide some evidence, but there are plenty of cases where fingerprints provide misleading evidence,” Gatsonis says.

That’s troubling, because fingerprints are one of the most common pieces of physical evidence found at crime scenes. In one study of 4,000 cases, police found prints at 75% of homicide, 16% of burglary, and 20% of robbery crime scenes, reported San Jose State University criminal justice professor Joseph Peterson at a February 2010 conference on fingerprinting and the courts. Convictions occurred in about two-thirds of cases where the only physical evidence was prints, he noted.

Yet nobody knows how many wrongful convictions rely on faulty fingerprint evidence. Forensic examiners get about 0.8% of identifications wrong on their proficiency tests, according to a 1996 Forensic Identification study, but that number may be lower or higher than the true rate.

However, mistaken fingerprint identifications probably are more common than we think, Cole says. More cases have come to light since the Supreme Court's 1993 ruling. One or two fingerprint mismatches are uncovered every year, almost always in serious cases like homicide or rape. But with lives on the line, people fight harder, launch an army of lawyers, and spend years to find flawed forensic evidence. A burglar or other common criminal won’t bother with that endless, costly rigmarole.

Putting prints to the test

Since the NAS report, several researchers have begun measuring fingerprinting’s accuracy. Cédric Neumann of the UK’s Forensic Science Service, and Christophe Champod and colleagues at the University of Lausanne, Switzerland, have developed a method to calculate the chance that a smudgy mark linked to one person could, by coincidence, have been left by someone else.

Champod’s team is interested in ID’ing the messy prints that are found in real crime scenes. A perp booked at the county jail presses his dusted palm down slowly, ensuring clean, undistorted lines. But a burglar who nicks the TV rarely places his fingers down slowly and evenly on the window ledge. Real prints are smudgy or twisted. Because skin can stretch, the spacing between ridges doesn’t match with the prints in the database, Champod says.

So his group has compiled a database of fingerprints from known sources and known distortions, such as pushing a finger up on a glass table-top, or twisting it sideways on a piece of paper.

Using this information, the program overlays the crime-scene print on candidate matches pulled from a giant database of known criminals, like the FBI’s IAFIS. For each candidate, it determines whether the smudgy print from the crime scene could be the same as the clean one on file if it had been partly rubbed off or pressed onto a rough wall. If it can, the program goes on to see how solid the match really is.

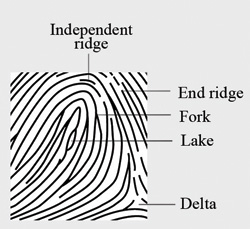

Champod also wanted to know which features are standouts and which are a dime a dozen. He trained 20 students to pick out the lines fingerprint examiners usually use to make a match. The team pored over 12,000 prints, and then marked points like “ending ridges” or “bifurcations” that would normally ID a print (see sidebar, Print This).

They fed the marked-up prints into a computer, which compared all 12,000 prints against each other. For each set of traits, it gives a numerical answer to the question: How rare are these features? Then, it spits out a probability. That reveals how often, by coincidence, you’d come up with a match that looked as good as this when you actually had the wrong guy.
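To make that last idea concrete, here is a minimal sketch in Python. It is not the Lausanne team’s model. It assumes each print has been boiled down to a set of feature labels, uses a made-up similarity measure (the count of shared labels), and runs on a tiny invented database in which every entry is a different person. All names and data are hypothetical.

```python
from itertools import combinations


def similarity(features_a, features_b):
    """Toy similarity score: how many feature labels two prints share.

    Hypothetical stand-in for a real minutiae comparison.
    """
    return len(set(features_a) & set(features_b))


def coincidental_match_rate(database, observed_score):
    """Fraction of different-person pairs that score at least observed_score.

    Every entry in `database` is assumed to come from a different person,
    so any high-scoring pair is a coincidence by construction.
    """
    pairs = list(combinations(database.values(), 2))
    hits = sum(1 for a, b in pairs if similarity(a, b) >= observed_score)
    return hits / len(pairs)


# Invented data: each person's print reduced to a handful of feature labels.
toy_database = {
    "person_1": {"r1", "r2", "b1", "b2"},
    "person_2": {"r1", "r2", "b3", "b4"},
    "person_3": {"r5", "r6", "b1", "b7"},
    "person_4": {"r1", "r8", "b9", "b2"},
}

# How often do two different people look at least as alike as a score of 2?
rate = coincidental_match_rate(toy_database, observed_score=2)
print(f"Coincidental match rate: {rate:.3f}")
```

In this toy set, two of the six possible pairs share at least two features, so a match at that level would turn up by coincidence about a third of the time. Raising the bar to three shared features drops the rate to zero here, which is the sketch’s version of rare features making an ID more convincing.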

Early results imply that court testimony is too black or white. Currently, a forensic scientist can only testify in court that a print matches a suspect, does not match, or that he or she doesn’t know one way or another.

“This ‘I don’t know’ is a huge grey area,” says examiner Glenn Langenburg of the Minnesota Bureau of Criminal Apprehension, who is analyzing how the model can help guide examiners in making matches. Some of these grey-area prints are almost certainly a match, whereas others are almost certainly not, Langenburg says.

But in court those grey areas are treated identically. “That doesn’t represent the evidence fairly,” he says. On some of those tough calls, it’s likely that examiners make the wrong one.

Prosecutors push back

Prosecutors are reluctant to call the cornerstone of courtroom evidence into question. “If you’re getting away with saying you're perfect, why should you open your doors to research that, at very best, could say ‘you're almost as good as you say you are?’” says UCLA law professor Jennifer Mnookin, who is also working on a model to assess fingerprint error.

It could leave the legal community in limbo, says Cole. Judges will have to decide how fingerprint evidence is dealt with in court while scientists put the method through a wringer of tests, which could take years. “For those five years you don’t have fingerprint evidence. It also casts doubt on previous convictions with fingerprints,” he says.

Worse yet, it would weaken other forensic sciences, such as arson investigation, shoe print identification, bite marks, and firearms analysis. Most experts suspect these techniques are much less reliable than fingerprinting. “Once you admit the point for fingerprints you sort of have to do it for these other areas as well,” Cole says.

Testing the validity of fingerprinting in real court cases would be a huge bellyache, says U.S. District Judge Nancy Gertner of Massachusetts. If scientists reevaluated 300 convictions, ones that had gone through the entire appeals process, and found that in one case, an examiner made a wrong call or overstated certainty, “How could you keep that under wraps? It’s not ordinary science, somebody is going to jail on that,” she says.

Yet it may not actually set someone free. Most often, fingerprint testimony is more certain than it should be, not overtly wrong, she says. “It’s not necessarily exonerating, it can just be undermining. I don’t think that it shouldn’t be done, but there are concerns.”

And after all the painstaking effort to give the technique a sheen of scientific credibility, juries may only get muddled. Indeed, studies have shown that juries don’t grasp statistics very well. “Right now the way we do it is all-or-nothing,” Langenburg says. “Just yes or no, keep it simple. As opposed to coming in with statistics, and throwing numbers at them like the DNA people.”

Examiners have been slow to embrace the models. The International Association for Identification, which has about 7,000 members, issued a defensive letter decrying the NAS report’s criticism. Some examiners are outright hostile, others mildly skeptical, but few are ready to toss out their gut instincts and rely on statistics. “The culture is not ready,” Champod says.

Moses thinks the effort to pin down error rates with statistics is a fool’s errand. “When you’re dealing with nature, you’re dealing with a unique essence, a unique image, a unique thing,” he says. “You cannot use probability theory on something that’s a population of one.”

Instead, he says, the match process will always be subjective. ID’ing a print is more like recognizing the face of a friend walking towards you from a distance. As he gets closer, you wonder, is that him? Could it be him? Then suddenly, you’re certain.

Moses sees this revelation when he’s in court. He will display two prints side by side, pointing out the ridges, the break in the lines, the similar pores and dots. “At some point you look over at the jury and they’re nodding their heads ‘yes.’ They’re comfortable it’s his prints,” he says.

The real room for improvement, he thinks, is in training forensic examiners. Most aren’t certified, and even the certified ones often pass unrealistically easy tests. “You have a police officer who spends most of their time on patrol, and someone tells them ‘you look at the fingerprints on your day off,’” Moses says.

To treat forensic evidence as science is to seek something uniform and repeatable. Yet everything about the real world of cops and robbers is contingent, flawed, and chaotic. Fixing fingerprints may improve a tiny chunk of the criminal justice system, but in the end there’s so much else that can go wrong. That was certainly true for Stephan Cowans, that Boston ne'er-do-well. While a faulty fingerprint put detectives on his trail, mistaken witness IDs and lab misconduct helped put him away. In the end, what did him in wasn’t a smudgy print on a glass, but a system that tries to make sense of the muck and very often fails.

Print This

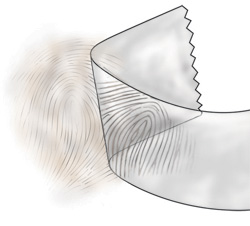

Lauren Zephro whisks a small brush back and forth, dusting black powder across a clear vial. An amoeba of smudges, previously invisible, appears. She rolls a line of clear tape onto the vial, then pulls it off in one swift motion. The black mass of prints transfers to the tape, which she then presses down onto a small index card. The prints are captured.

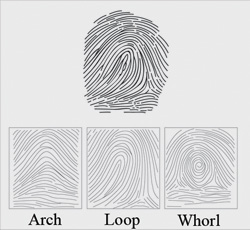

All sidebar illustrations: Adriana Ocaña Lara

These hidden, or "latent," prints are made of body oils and sweat left behind when someone touches a surface. Despite all the gizmos people see on CSI, this process relies on the same simple tools it did a hundred years ago—a brush, a bit of black powder, a paper, and glorified scotch tape.

This process would normally be done at the site of a homicide or break-in. In a robbery, the police might dust the window ledge and little else. In a murder, they pull out all the stops, dusting everything in sight for prints.

Zephro does about 500 cases a year. “Burglaries are my bread and butter,” she says, although she’s also cracked a few cold homicides.

Once these cards come to Zephro, she leans down and looks into a loupe, the small magnifying glass that jewelers use to inspect diamonds. She first looks at the mess of black dust to see if she can pick out usable prints. “Latent prints are like fossils,” she says. “It’s pretty rare to find one perfectly preserved.” Though most look like fluffy clouds, she displays two partial impressions that have clear lines.

She focuses on one, picking out the general pattern. Lines that swoop in from one side of the print, circle around, and return are a loop. Lines that swerve up, then down, like a camel hump are an arch. And if the pattern seems to swirl in towards the center, it’s a whorl.

These groupings are enough to rule out an obviously wrong match, but not enough to make an ID. So she looks again. The staples of an ID are the “minutiae,” called ending ridges and bifurcations. Ending ridges are spots where the lines dead-end, while bifurcations are branches. She notes each one, scribbling descriptions in her case report.

Finally, she jots down “third level detail”: marks such as pores, or places where the lines seem to widen or narrow. The hasty prints left at a crime scene are unlikely to have such details preserved, and what looks like one can often be a speck of dirt or an artifact of how someone pressed onto the surface.

She scans the print into the computer, then clicks on the print image in different spots, to show the computer which points she wants it to look for. Then she runs the database. A series of names pops up in the screen’s right corner, each with a score that can be anywhere between 0 and 9999, giving a rough estimate of how close the match is. The computer flags any matches above 900.

She pulls up the high scorer, with 1107. She points out the similar spots and calls it a match.
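The flagging step is simple enough to sketch in a few lines of Python. This is an illustration only, not the software Zephro uses. The threshold of 900 and the top score of 1107 come from the description above; the candidate names and the other scores are invented.

```python
# Scores above this value are flagged, per the description above.
FLAG_THRESHOLD = 900


def flag_candidates(scores, threshold=FLAG_THRESHOLD):
    """Return (name, score) pairs above the threshold, highest score first."""
    flagged = [(name, score) for name, score in scores.items() if score > threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical database results; only the 1107 figure appears in the story.
candidate_scores = {
    "candidate_a": 1107,
    "candidate_b": 640,
    "candidate_c": 905,
    "candidate_d": 212,
}

flagged = flag_candidates(candidate_scores)
for name, score in flagged:
    print(name, score)
```

The score only ranks candidates. As the sidebar shows, the examiner still pulls up the high scorer and compares the prints by eye before calling it a match.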

Story ©2010 by Tia Ghose. For reproduction requests, contact the Science Communication Program office.

Biographies

Tia Ghose

B.S. (mechanical engineering) University of Texas at Austin

B.A. (Plan II) University of Texas at Austin

M.S. (bioengineering) University of Washington

Internship: Milwaukee Journal-Sentinel (Kaiser Family Foundation health reporting internship program)

“Maybe you should stick to theory,” my undergraduate advisor suggested as he scanned my thesis. I had flailed at the microscope and the metal polisher for months, so he was surprised by how clearly I’d described the metal’s behavior.

I took his advice and modeled bacteria. But while I loved the mystery, I was less enthralled with the drudgery of debugging computer code and my tiny scientific domain. I was mastering Escherichia coli but missing the world. So I slipped out of the lab and started writing about it.

My advisor saw my omnivorous curiosity and my writing skill, but he couldn’t fit those pieces together. Science writing has done that. I can learn how metals crystallize or why bacteria stick, without sticking to any one thing.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Cynthia Clark

B.A. (biology) University of California, Santa Barbara

I've loved biology and art for as long as I can remember. Above all, I love that no matter how much I learn about the natural world, there's still an inexhaustible supply of even more amazing things to discover.

I was thrilled to find out about science illustration as a vocation that would allow me to combine two lifelong passions and to share knowledge with a wide audience. The Science Illustration Program has been a challenging and enlightening experience that has opened many new possibilities for me. I am particularly fond of mammals and am holding a baby wombat in my photo.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Adriana Ocaña Lara

B.A. (coastal marine biology) University of Puerto Rico, Humacao

Internships: University of Puerto Rico, Río Piedras; Mote Marine Laboratory, Florida

Since childhood, I have been taught to love nature and the arts, and both have become the passions of my life. Living on an island in the Caribbean is my inspiration: I enjoy the bright colors and the wide range of tropical species in this part of the world. It has been a great opportunity to be part of the Science Illustration Program, where I could share my interests in science and art with others like me.
